On my blog I’m documenting some of the most interesting issues and possible solutions I’ve stumbled upon while working on projects.


Blog Overview

- Demo: A simple ChatGPT integration with Laravel

- Solution Concept: Mastering the journey to the Cloud

- Demo: Multitenancy with Spring Boot and JPA

- Solution Concept: docToolchain Integration

- Solution Concept: GitLab-CI Data Pipelines

- Demo: Spring Boot Graceful Shutdown

- Solution Concept: SSO for SPA using OAuth2

Demo: A simple ChatGPT integration with Laravel

GitHub Repo

Description

Check out the Source Code at GitHub and look at the README for more details and instructions on how to run the demo.

Solution Concept: Mastering the journey to the Cloud

Solution concept and PoC for Cloud Migration

Project Context and Goals

- Establish a DevOps workflow and best practices for

  - Migration (and modernization) of existing Web apps to the Cloud

  - Development of new Web apps for the Cloud - “Cloud first approach”

- Establish an end-to-end DevOps environment that supports Continuous Integration/Continuous Deployment (CI/CD)

  - CI/CD Pipeline to build, test and deploy in the Cloud

  - Evaluate GitHub Actions, AWS CodeBuild

  - Evaluate Rolling Deployment vs Blue-Green Deployment

- Use Cloud native technologies and tools

  - no vendor lock-in

- Proof of concept

What is Cloud native?

Cloud native technologies empower organizations to build and run scalable applications in modern, dynamic environments such as public, private, and hybrid clouds. Containers, service meshes, microservices, immutable infrastructure, and declarative APIs exemplify this approach.

These techniques enable loosely coupled systems that are resilient, manageable, and observable. Combined with robust automation, they allow engineers to make high-impact changes frequently and predictably with minimal toil.*

Cloud Migration Strategies

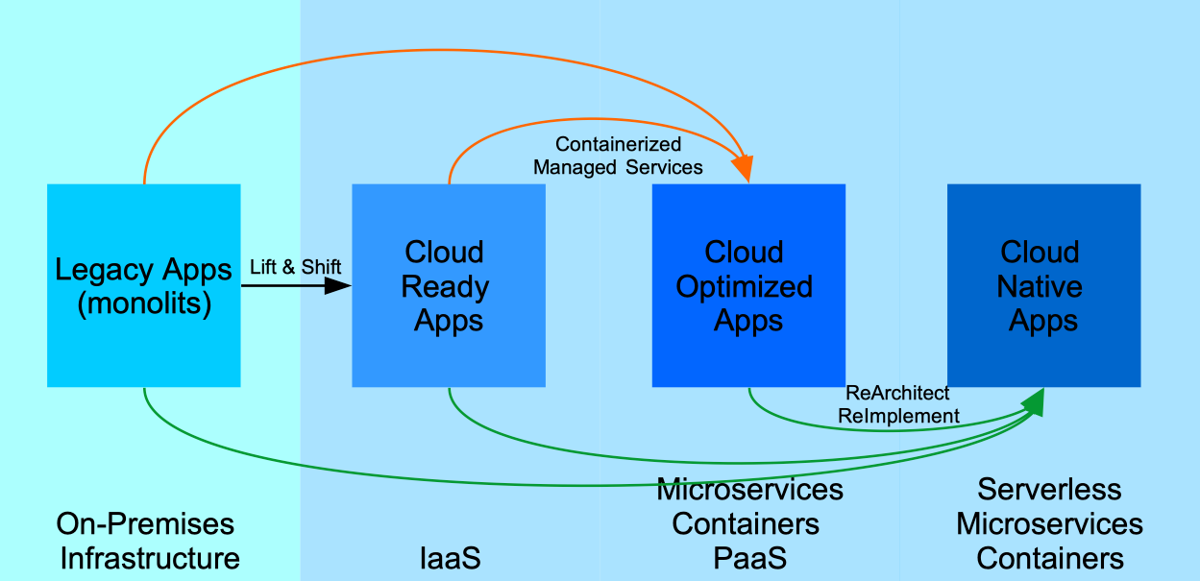

Strategies for migrating legacy (monolithic) apps

Cloud Ready Migration (aka “Lift-and-Shift” or “Rehost”)

- No code changes

- IaaS model

- Cloud providers usually offer migration tools such as AWS Migration Hub or Azure Migrate

- Quick and therefore cheap, yet does not leverage most of the benefits of Cloud Computing

Cloud Optimized Migration

- Requires minimal code changes

- PaaS model

- Containerization of the App and deployment to a Container Orchestrator

- Decomposition into Microservices

- Data-Driven Microservices (CRUD)

- Consuming Cloud managed services like Databases, Caching, Monitoring, Message Queues

- Deployment optimizations (CI/CD) that enable key cloud services without changing the core architecture

- Higher costs and overhead, yet better scalability and performance; leverages most of the benefits of Cloud Computing

Cloud Native Migration

- Requires rearchitecting and rewriting code

- Microservices Architecture (Decomposition into Microservices and Containerization)

- Data-Driven Microservices (CRUD)

- Domain-Driven Microservices (CQRS, Event Sourcing)

- Serverless Architecture

- Event-Driven Architecture

- API-Management, API-Gateway

- Highest costs, yet future-proof investment (fine-grained scalability, improved system resiliency, performance and operations), all benefits of Cloud Computing

References

- 6 Strategies for Migrating Applications to the Cloud

- Introduction to Cloud-native applications

- Modernizing legacy apps

Proof of Concept Goals

- Migrate an existing Web-based application to the Cloud using the second migration strategy, “Cloud Optimized Migration”

- Produce a cloud-native reference application on AWS that showcases how Laravel, Docker, GitHub, Cloud managed services and a CI/CD pipeline are used to build a minimal PHP-based application.

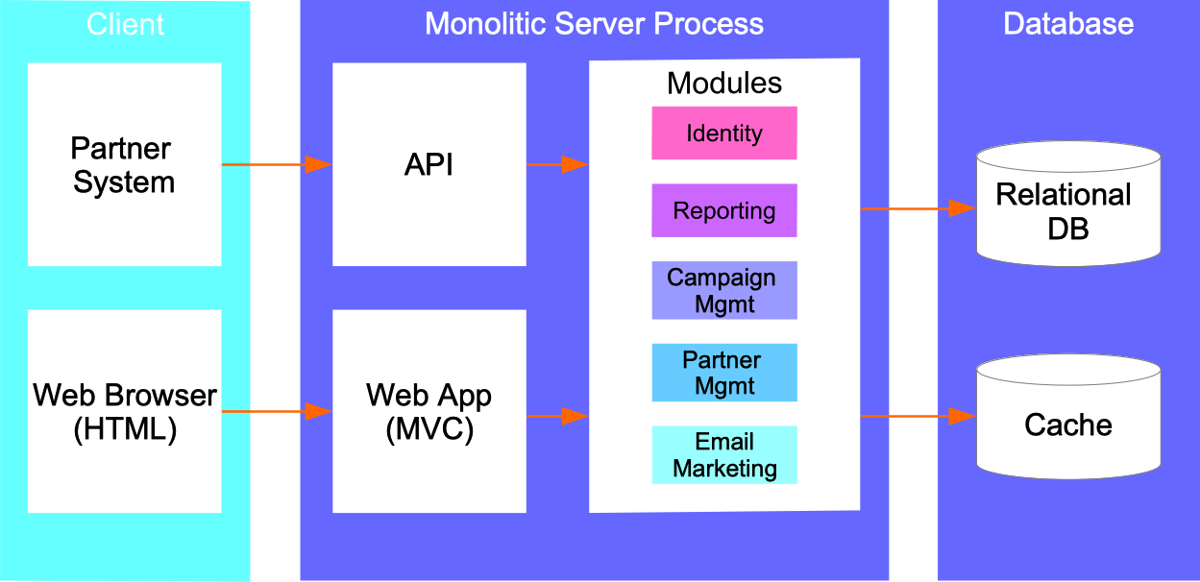

Migration of a (monolithic) Web App

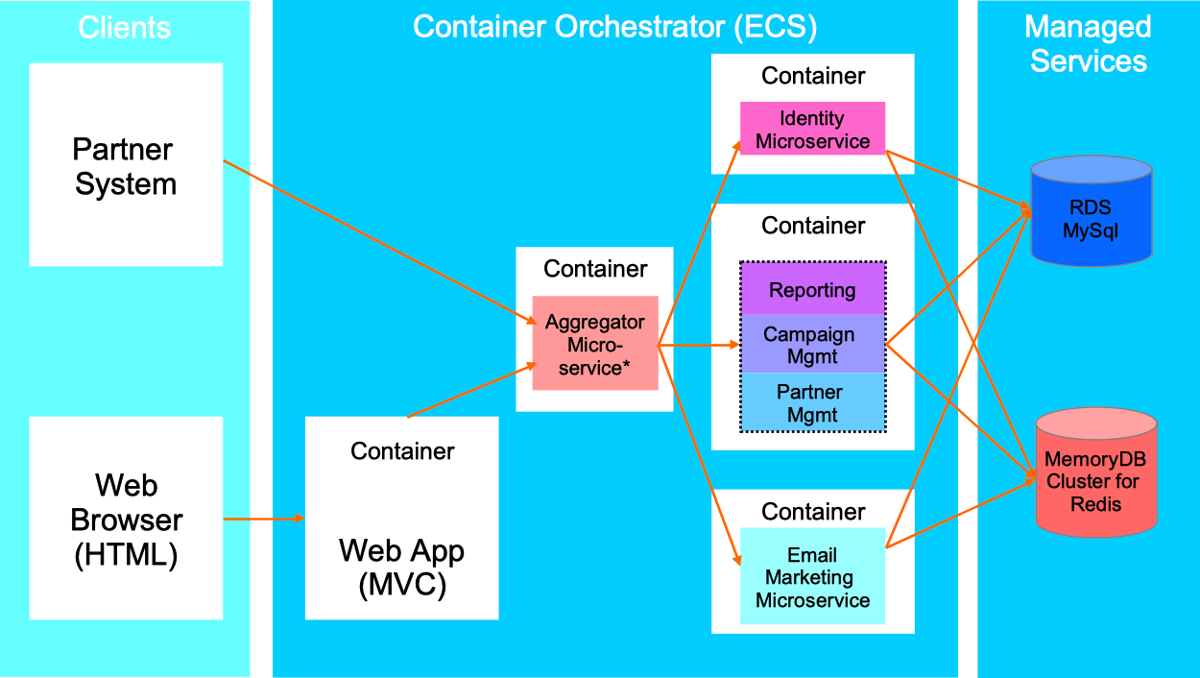

Architecture Overview

Monolithic Web App Architecture, deployed on-premises

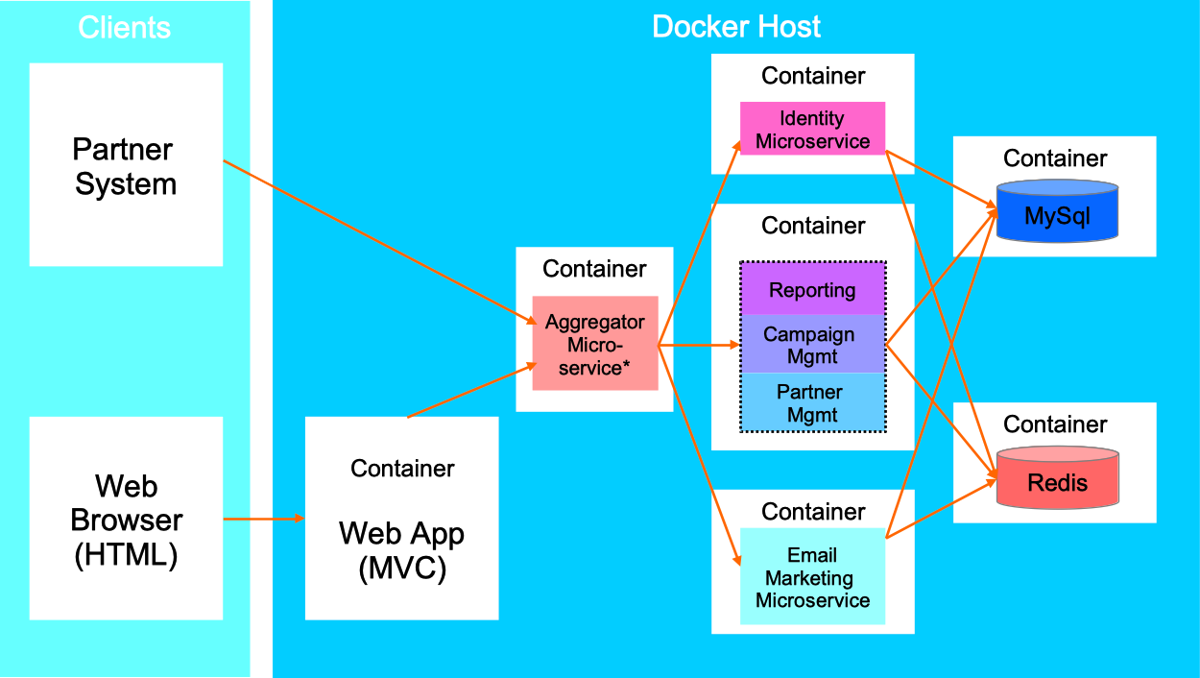

Cloud Optimized Architecture - Development/Staging Environment

Cloud Optimized Web App Architecture (local development and staging)

*Service Aggregator Pattern / API Gateway

Cloud Optimized Architecture - Production Environment

Cloud Optimized Web App Architecture (production)

*Service Aggregator Pattern / API Gateway

Summary and Outlook

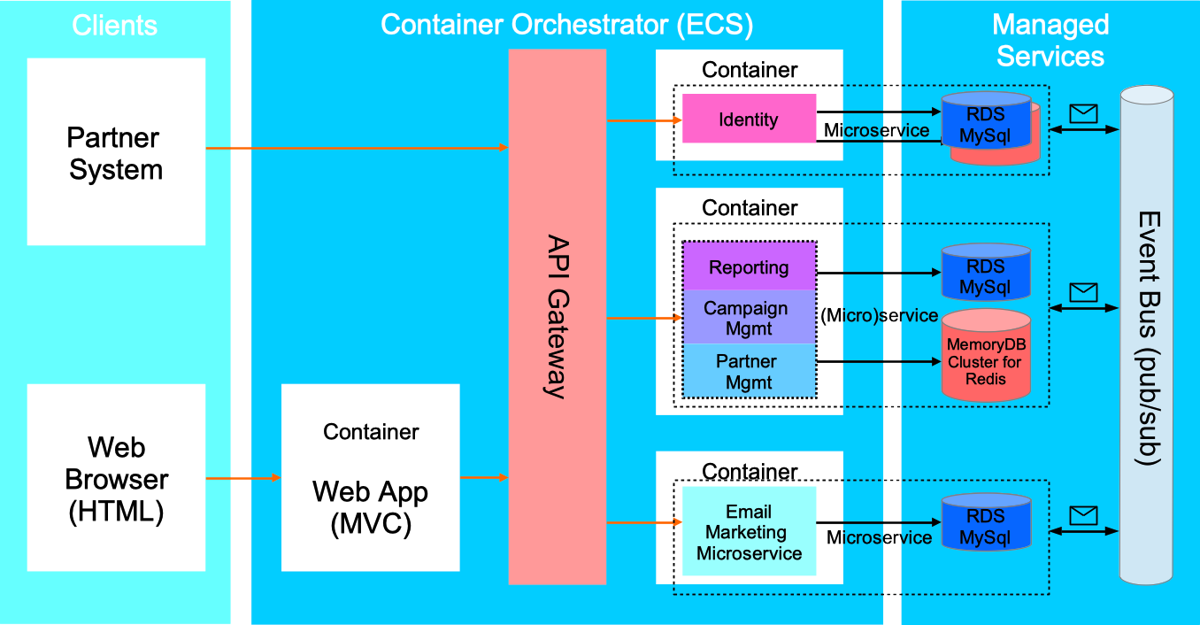

What would a Cloud-native architecture look like? Here are some recommendations.

Cloud-native Web App Architecture

Microservices, Event-Driven Architecture, API Gateway

- Topics not touched: Security, backup services, monitoring

- If multiple front-end clients (SPA, mobile clients) must be supported in the future and/or many complex APIs must be exposed:

  - Introduce an API Gateway or even API Management

- If appropriate, move from the “single/shared database” model to a “share nothing” model where each microservice owns its data

- Event-Driven Architecture: use events for communication between microservices, in a decoupled, reliable and asynchronous manner

  - For local development, RabbitMQ can be used as a message broker

  - In the AWS Cloud, use a fully managed message broker: Amazon MQ (supports ActiveMQ and RabbitMQ)

- Serverless: consider using a single function where sufficient (not customer-facing, single operation) instead of developing and maintaining a full microservice

- Configure centralized logging - CloudWatch
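To make the event-driven recommendation a little more concrete, here is a minimal Python sketch of publish/subscribe between two services. `InMemoryBroker`, `Event` and the topic name are hypothetical and only stand in for a real broker such as RabbitMQ or Amazon MQ; the sketch runs in a single process and does not provide the reliability a real broker does.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    """A message published by one service and consumed by others."""
    topic: str
    payload: dict


class InMemoryBroker:
    """Toy, in-process stand-in for a message broker (assumption, not a real client)."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of handler callables
        self._queue = []                       # events waiting for delivery

    def subscribe(self, topic, handler):
        """Register a callable that receives every event published on `topic`."""
        self._subscribers[topic].append(handler)

    def publish(self, event):
        """Queue the event and return immediately; the publisher does not wait."""
        self._queue.append(event)

    def deliver_pending(self):
        """Hand queued events to their subscribers; returns the number of events processed."""
        processed = 0
        while self._queue:
            event = self._queue.pop(0)
            for handler in self._subscribers.get(event.topic, []):
                handler(event)
            processed += 1
        return processed
```

The publishing service only knows the topic, not who consumes it. A mail service could, for example, call `broker.subscribe("user.registered", send_welcome_mail)` without the registration service changing at all.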

Reference Web App

Info

Sources: AWS ECS Laravel Demo

Main focus: DevOps Workflow, CI/CD

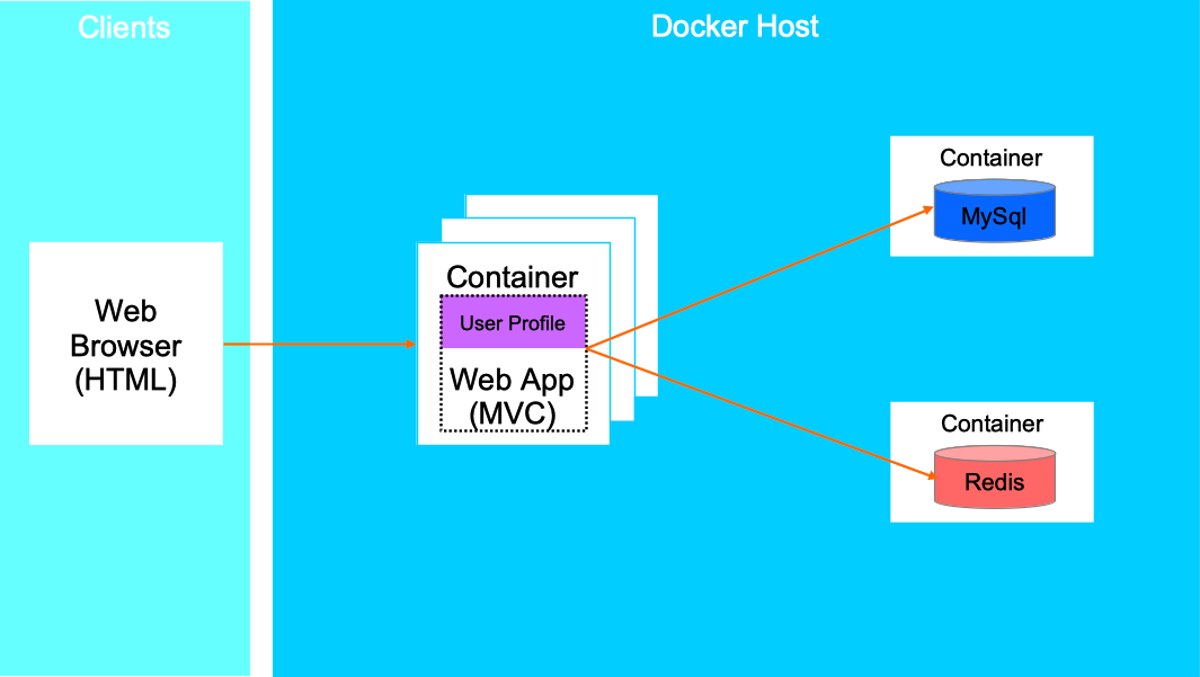

Cloud Optimized Reference Web App - Development/Staging Environment

Cloud Optimized Reference Web App Architecture (local development and staging)

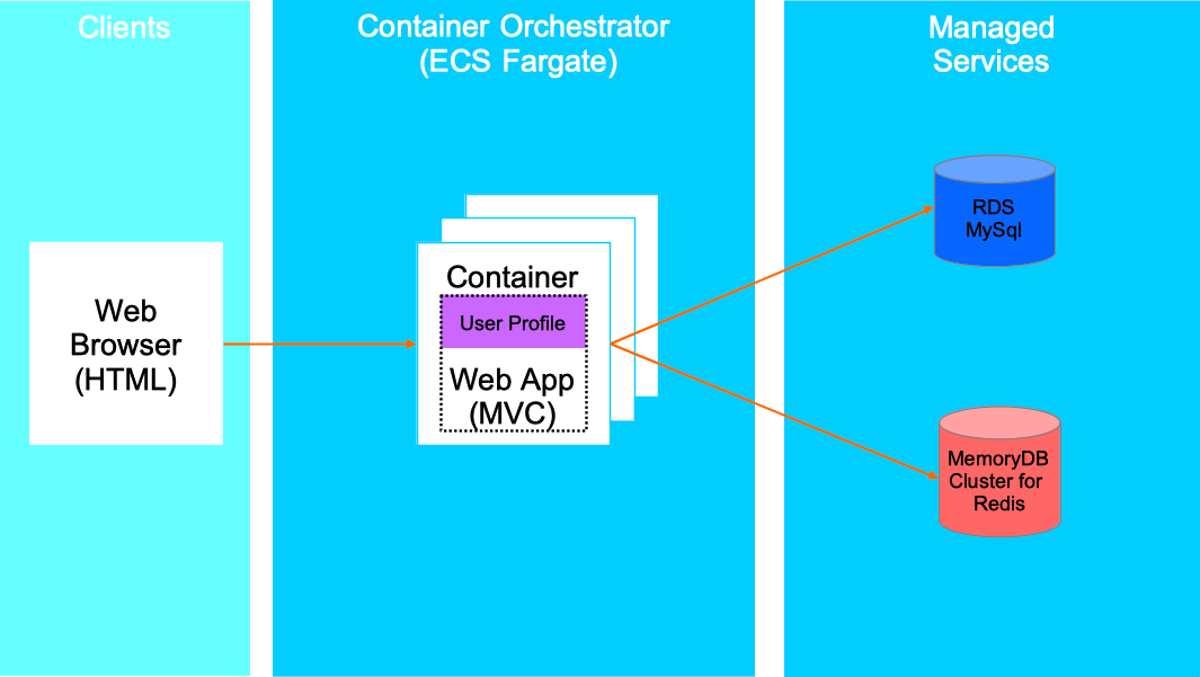

Cloud Optimized Reference Web App Architecture (production)

Basic Features

- Sign in and out

- Registration flow

- Password reset flows

- Review and Edit user’s profile

Non-Functional Requirements of the Reference App

- High availability

- (Optional) Scale out and in automatically to meet increased traffic

- Support an agile development process, including CI/CD

- For simplicity, support only traditional Web front ends (no SPA, no mobile clients)

- The design should support

  - cross-platform development (no platform lock-in) and

  - cross-platform hosting (no cloud vendor lock-in)

Evaluated and leveraged technologies and tools

- CI/CD: GitHub-Actions, CodeBuild

- Centralized configuration: S3 Service (storage, configuration)

- Secure Credentials: Secrets Manager (SSM)

- Container Registry: private registry (S3-based) / ECR

- Container Orchestrator and Clustering - ECS

  - ECS with AWS Fargate launch type (serverless)

- Load Balancing (ELB)

- Data stores: MySQL/MariaDB (RDS)

- Caching, Session management, Queueing (MemoryDB Cluster for Redis)

DevOps Workflow for Dockerized Web Apps

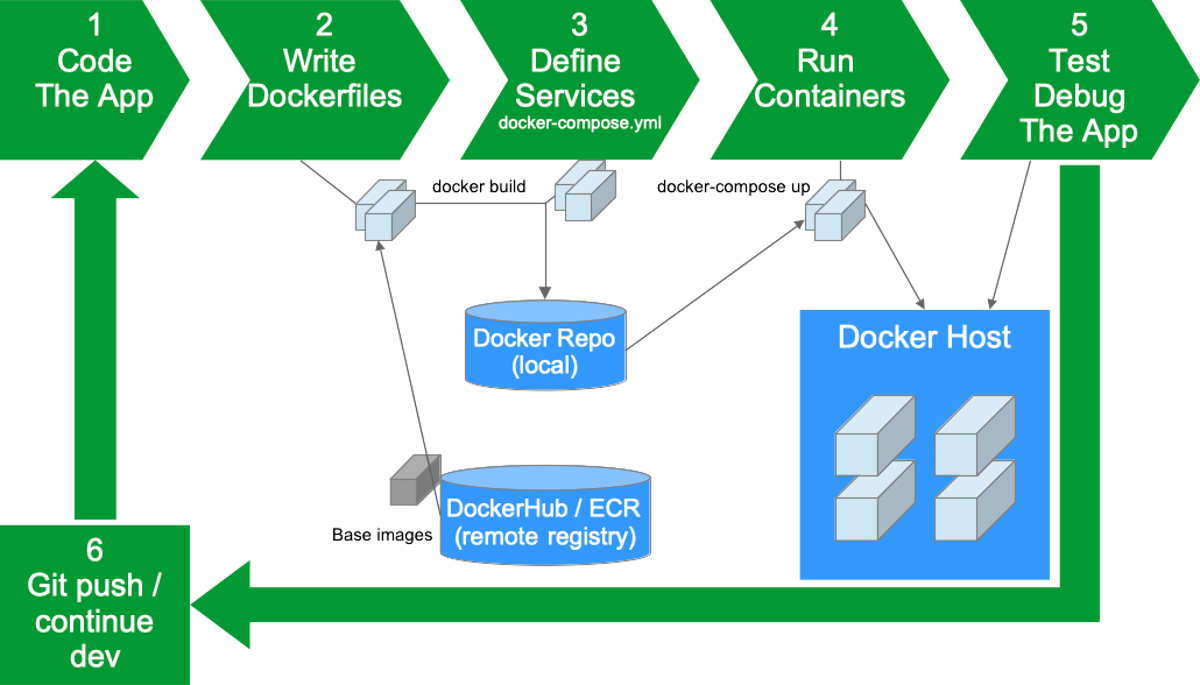

Local Development Workflow

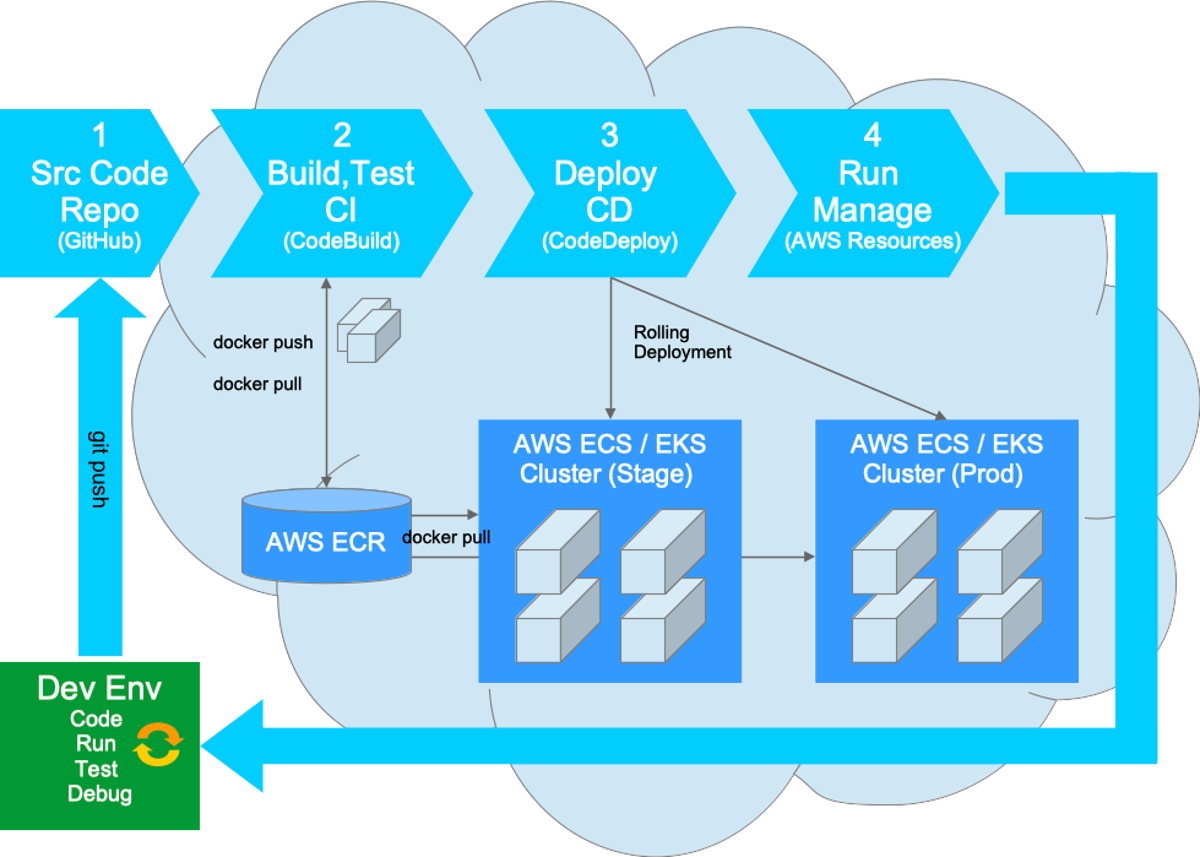

DevOps Workflow in the Cloud (AWS)

Demo: Multitenancy with Spring Boot and JPA

GitHub Repo

Description

Check out the Source Code at GitHub

Solution Concept: docToolchain Integration

GitHub Repo

Solution concept and PoC for docToolchain integration

Problem Description

Requirements and Context

SwaggerHub is the tool used for API design, development and documentation (e.g. Service Catalogue).

Confluence is the single source of truth for any type of documentation including software architecture documentation, design decisions, module specifications, developer how-tos, user guides, meeting notes, etc.

Goal: follow the Doc-as-Code approach for documentation

- Use the same tools and workflows as development teams

Goal: fully integrated API Management: connect Publisher Portal, Developer Portal and API Gateway

General Requirements:

- Publish all API-related documentation (interfaces, implementations, OpenAPI definitions) to Confluence.

- Sources should be automatically synced with Confluence

- Define page structure and page hierarchy for each document type: Separate spaces for general software architecture, software module specs and API definitions

- Integration in the build and deployment pipelines

Solution (PoC)

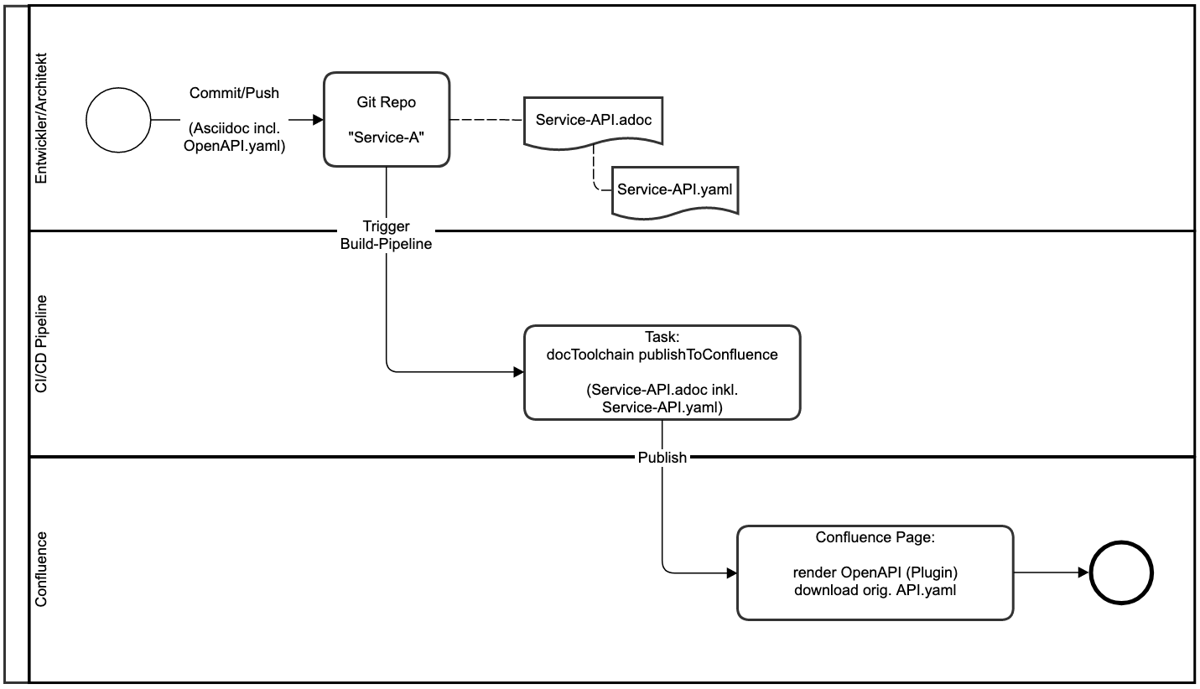

docToolchain integration in the software build pipeline

Solution Overview

Solution Concept: GitLab-CI Data Pipelines

Project News

Solution concept for Data Deployment and Data Test Pipelines and implementation based on GitLab-CI

Problem Description

What does ‘Data Deployment’ mean?

In a microservice landscape, data and software can be developed separately and are only “linked” together at runtime in a given environment (stage). Such a separation is often predetermined by the organizational structure: different departments and teams are responsible for software development and data management. A CI/CD approach then inevitably affects data management as well: data must be deployable to the different stages, just like software. In such a context, a microservice consists of software and data that are developed independently of one another. Before going live, however, the microservice(s) must be extensively tested “as a whole”, i.e. software and data together.

What does ‘Data Test’ mean?

In contrast to software testing, data testing focuses on the data. The software (e.g. a microservice) has already been sufficiently tested (through unit tests, automated integration tests, static code analysis, etc.). In the data test, a software artifact with the associated data is automatically tested. Depending on the objective, several so-called Quality Gates can be defined.

What is a ‘Data Deployment Pipeline’?

A data deployment pipeline orchestrates the deployment and testing of software and data across the stages, up to the release to production, and defines several quality gates along the way (the protection requirements of the data play an important role here). The software has already been built by a Software-Build-Pipeline, e.g. as a Docker container. The pipeline “links” it to a new data version, for example via configuration, then tests the combination and finally puts it into production.
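The “linking” step can be pictured as pairing an already built image with the data version configured for a stage. The following Python sketch illustrates that idea only; the `Release` type, the `link` function and the parameter naming scheme are assumptions for illustration, and a plain mapping stands in for whatever configuration store is used.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Release:
    """What actually runs in a stage: a built image plus a data version."""
    service: str
    image_tag: str
    data_version: str


def link(service: str, image_tag: str, stage: str, parameters: Mapping[str, str]) -> Release:
    """Pair the image with the data version configured for `stage`.

    Raises KeyError when no data version is configured for the service in that stage.
    """
    key = f"/{stage}/{service}/data-version"  # hypothetical naming scheme
    if key not in parameters:
        raise KeyError(f"no data version configured for {service} in stage {stage}")
    return Release(service=service, image_tag=image_tag, data_version=parameters[key])
```

Because the image tag stays the same while only the configured data version changes, the same software artifact can be promoted through the stages with different data.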

Solution

Main Features of the Pipeline:

- Automatic rollback of all dependent microservices (dependency in terms of “shared data version”)

  - when a deployment failed (one or more service deployments) or

  - when a Quality Gate did not pass (QGs can be configured as “allowed to fail”)

- Queueing: orchestration of pipelines running in parallel

  - at the time the solution was developed, GitLab did not provide this feature (Jenkins, in contrast, provides it out of the box), therefore we had to implement it ourselves

- Notification (Rocket.Chat) of ‘Start’ and ‘Finish’ (with details like ‘Success’, ‘Failure’ and ‘Cause’)
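The rollback rule above can be sketched in a few lines of Python. This is an illustration of the control flow only, not the GitLab-CI implementation: `QualityGate`, `run_pipeline` and the `deploy`/`rollback` callables are hypothetical names, and the returned cause string stands in for the detail that would go into the notification.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class QualityGate:
    name: str
    check: Callable[[], bool]      # returns True when the gate passes
    allowed_to_fail: bool = False  # a failing gate of this kind does not stop the run


def run_pipeline(services, deploy, rollback, gates):
    """Deploy every service, run the gates, roll back all services on a hard failure.

    `deploy(service)` returns True on success; `rollback(service)` undoes a deployment.
    Returns a tuple (status, cause) where status is "success" or "rolled back".
    """
    failure = None
    for service in services:
        if not deploy(service):
            failure = f"deployment of {service} failed"
            break
    if failure is None:
        for gate in gates:
            if not gate.check() and not gate.allowed_to_fail:
                failure = f"quality gate {gate.name} failed"
                break
    if failure is None:
        return "success", None
    # The services share one data version, so all of them are rolled back,
    # including those whose own deployment succeeded.
    for service in services:
        rollback(service)
    return "rolled back", failure
```

A gate marked `allowed_to_fail=True` is still executed, so its result can be reported, but it never triggers the rollback.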

Tech Environment:

- AWS Cloud

- App and backend services run as Docker containers

- Data is provided via AWS S3 Service (S3 Object Store)

- S3-buckets separated by purpose (preview, live), with access only from specified environments, and in different accounts (prod, non-prod)

- Configuration of Data-Version via AWS SSM Parameters

- Kubernetes on AWS - EKS

- Docker on AWS - ECR

- Helm (Helmchart, Helmfile)

- GitOps - Continuous Deployment

- GitLab-CI - Continuous Integration

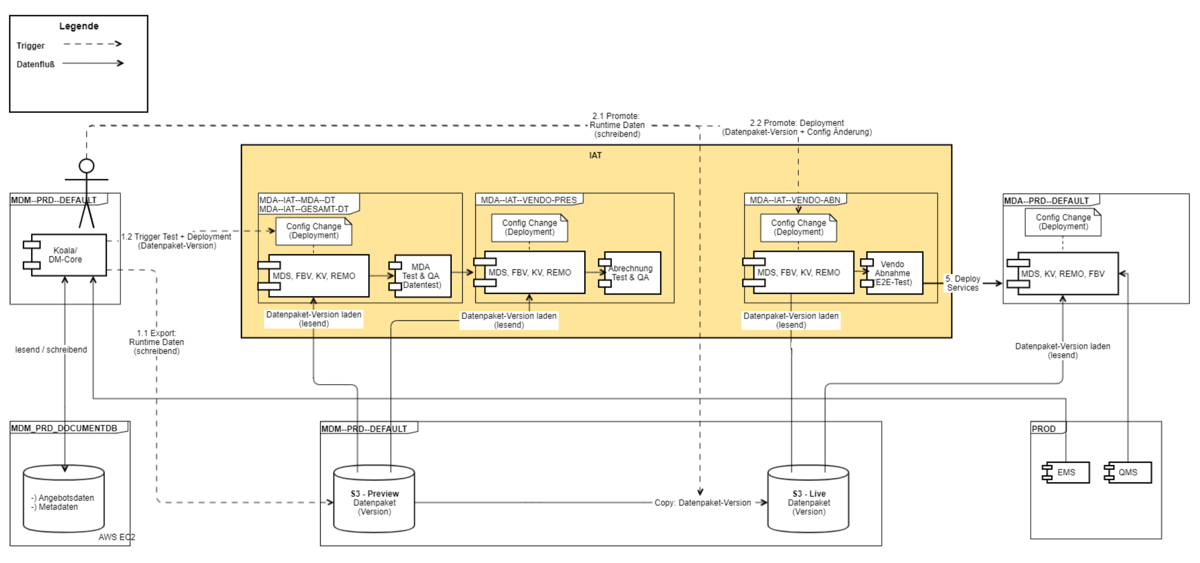

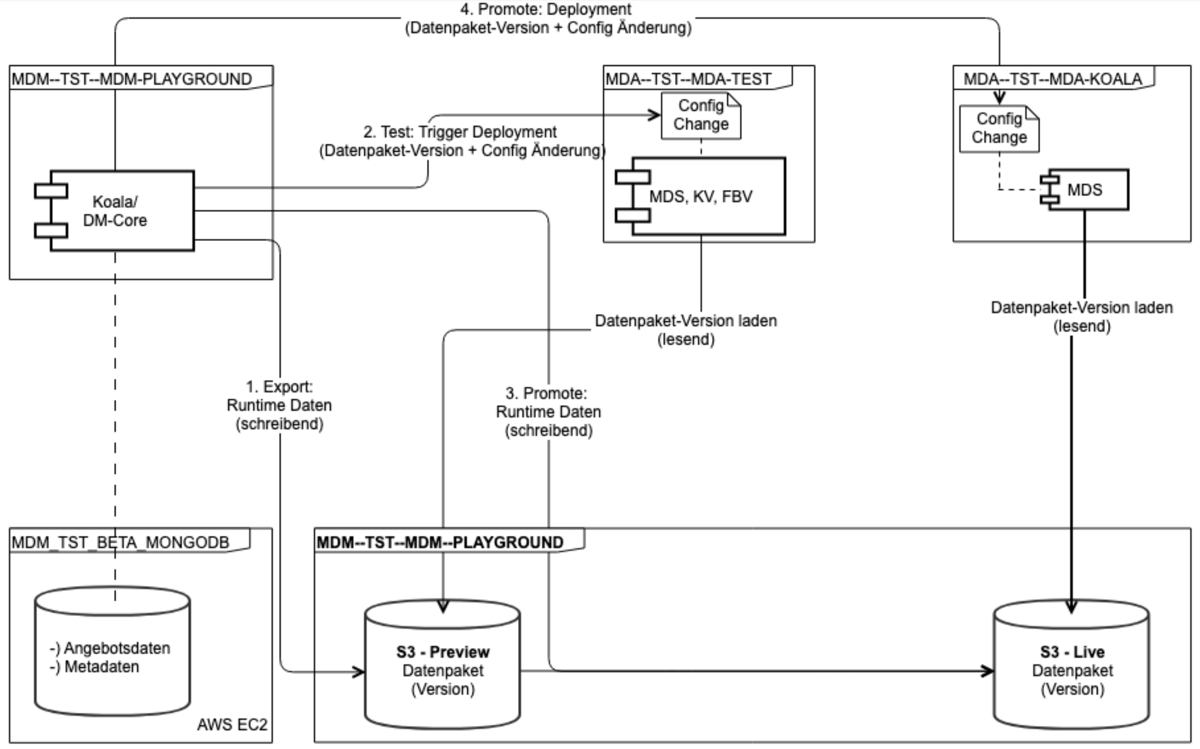

Architecture Overview

Deployment Schema and Runtime View (PRODUCTION):

Deployment Schema and Runtime View (PLAYGROUND):

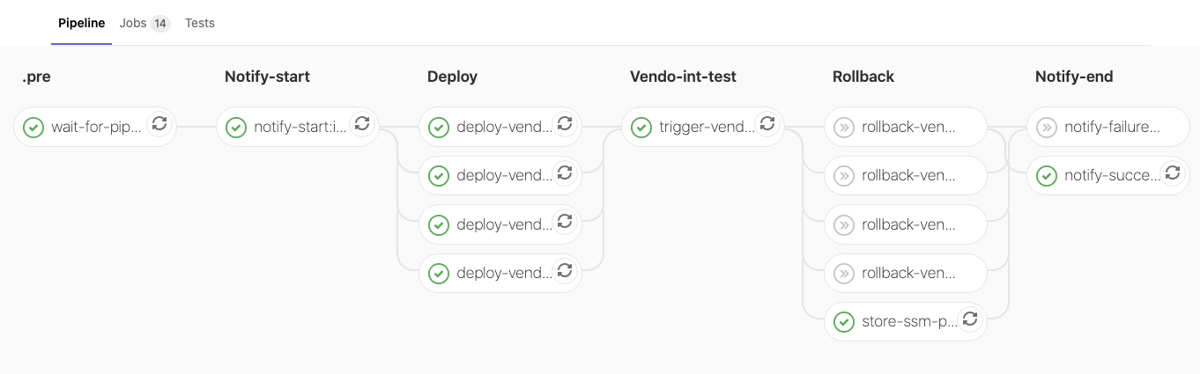

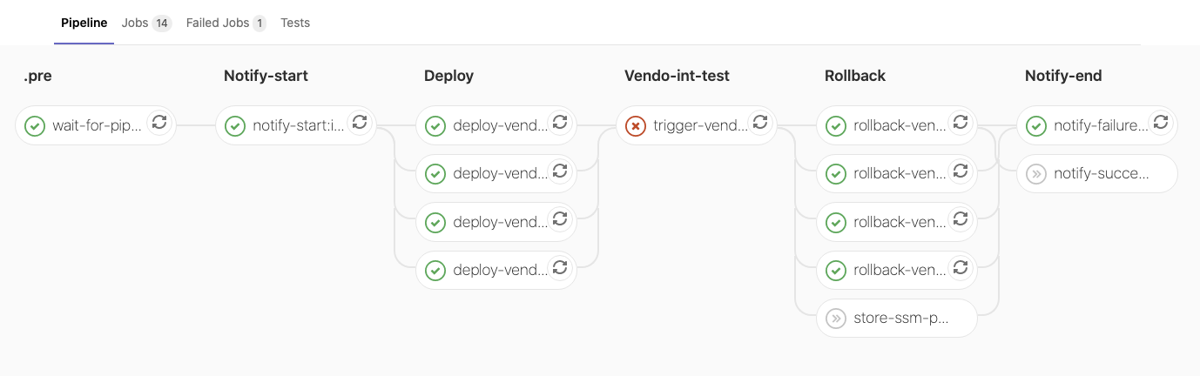

Examples of Data Pipelines in GitLab-CI

Int-Env - Successful run:

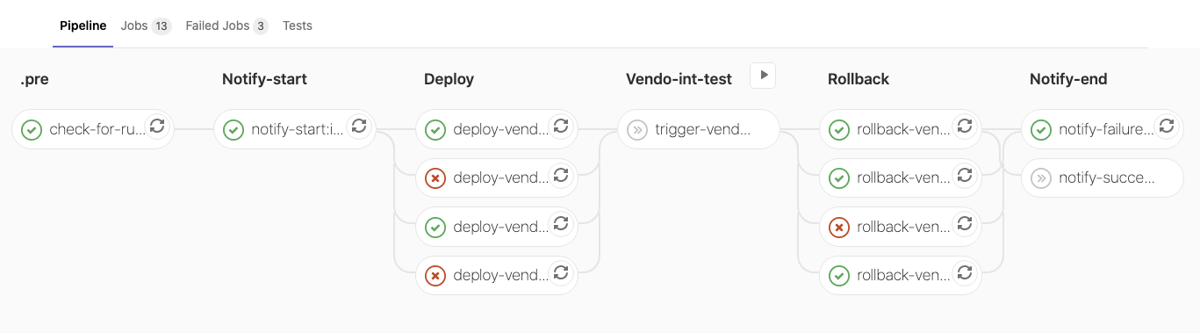

QG ‘Int-Test’ failed and Rollback:

Deployment failed and Rollback failed:

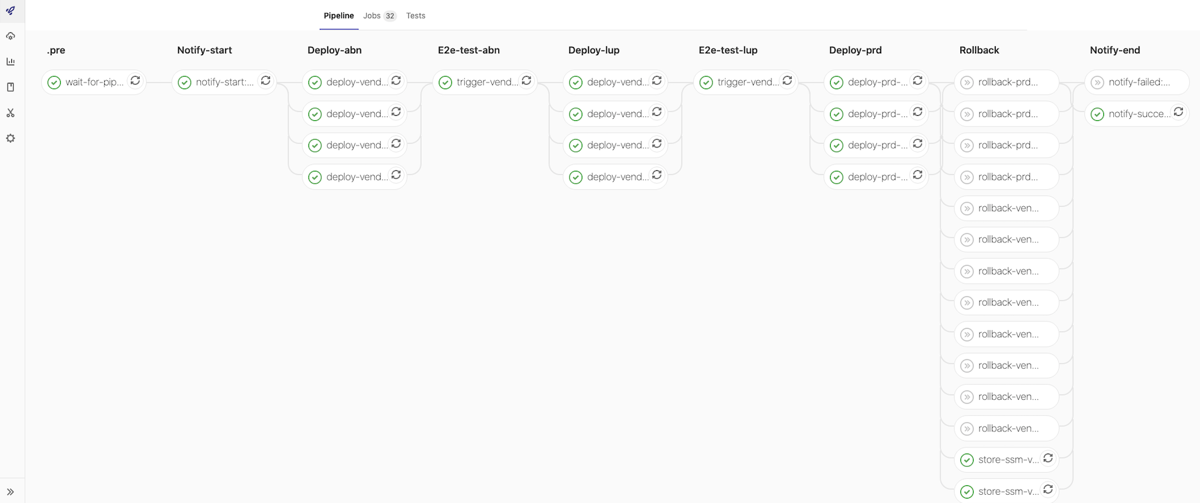

PreProd-Env Successful run:

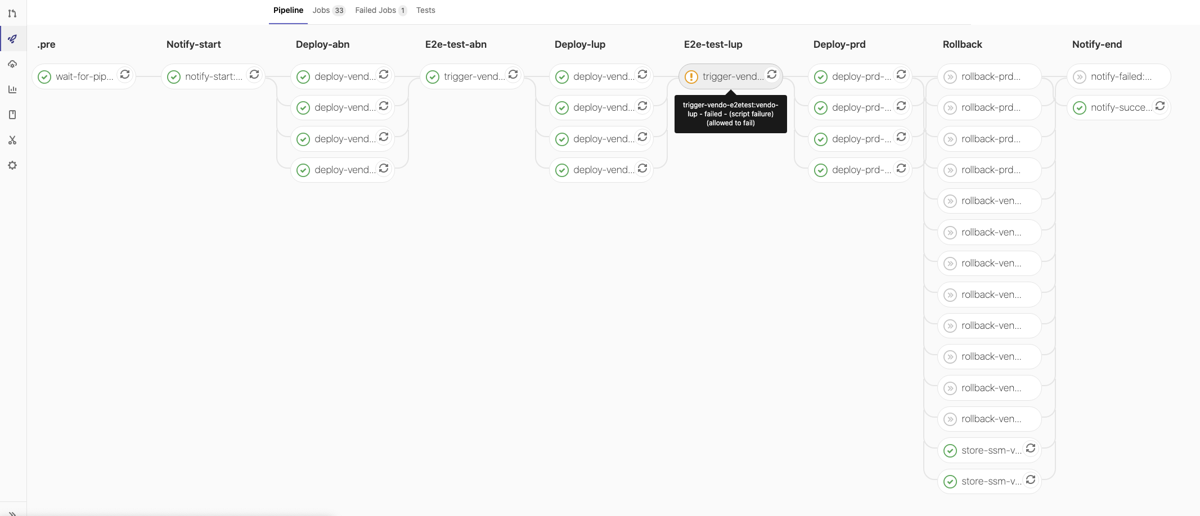

QG ‘LuP-Test’ allowed to fail, successful run:

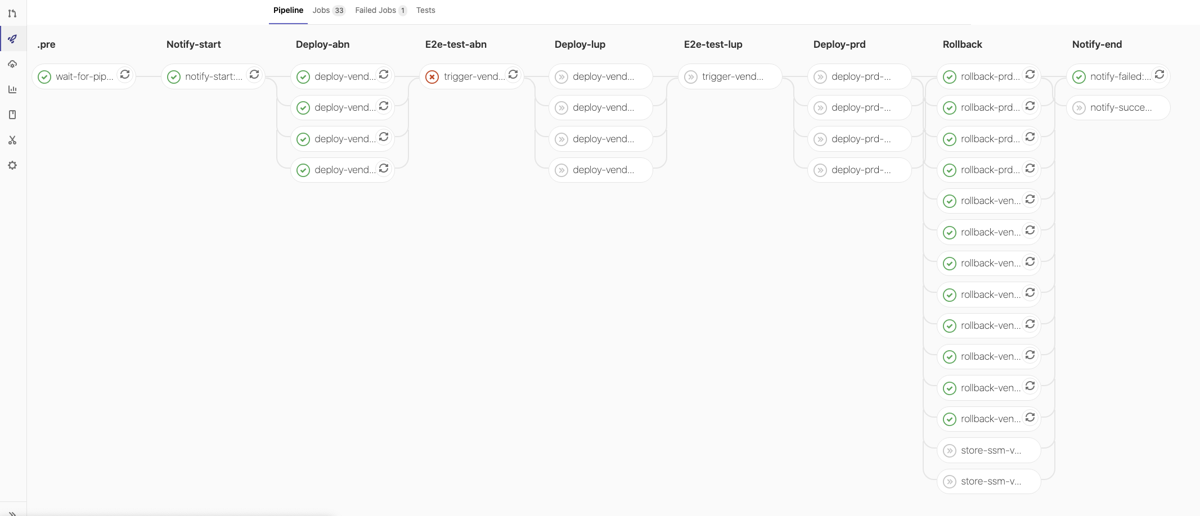

QG ‘ABN-Test’ failed, Rollback:

Demo: Spring Boot Graceful Shutdown

GitHub Repo

Description

Disposability is one of the principles described in “The Twelve-Factor App” and a best practice for building cloud-native applications. It states that service instances should be disposable: fast startup should be favored to increase scalability, and graceful shutdown to leave the system in a correct state.

This demo project demonstrates some pitfalls of graceful shutdown configuration in a Spring Boot microservice deployed as a Docker container.
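The idea behind a graceful shutdown is independent of the framework: once the termination signal arrives, stop accepting new work, let in-flight work finish, and give up after a grace period. The Python sketch below illustrates only that idea and is not the Spring Boot mechanism used in the demo; `GracefulWorker` and `install_sigterm_handler` are hypothetical names.

```python
import signal
import threading


class GracefulWorker:
    """Stops taking new work on shutdown but finishes what is in flight."""

    def __init__(self):
        self._shutting_down = threading.Event()
        self._in_flight = 0
        self._cond = threading.Condition()

    def request_shutdown(self, signum=None, frame=None):
        """Begin shutdown; the signature matches what signal.signal() passes to a handler."""
        self._shutting_down.set()

    def handle(self, job):
        """Run `job` (a zero-argument callable) unless shutdown has begun."""
        with self._cond:
            if self._shutting_down.is_set():
                raise RuntimeError("shutting down, not accepting new work")
            self._in_flight += 1
        try:
            return job()
        finally:
            with self._cond:
                self._in_flight -= 1
                self._cond.notify_all()

    def wait_until_drained(self, timeout):
        """Block until in-flight work is done; returns False if the grace period ran out."""
        with self._cond:
            return self._cond.wait_for(lambda: self._in_flight == 0, timeout=timeout)


def install_sigterm_handler(worker):
    """Route SIGTERM to the worker; must be called from the main thread."""
    signal.signal(signal.SIGTERM, worker.request_shutdown)
```

In a container this only works if the signal actually reaches the application process, and if the grace period granted by the runtime is at least as long as the timeout passed to `wait_until_drained`.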

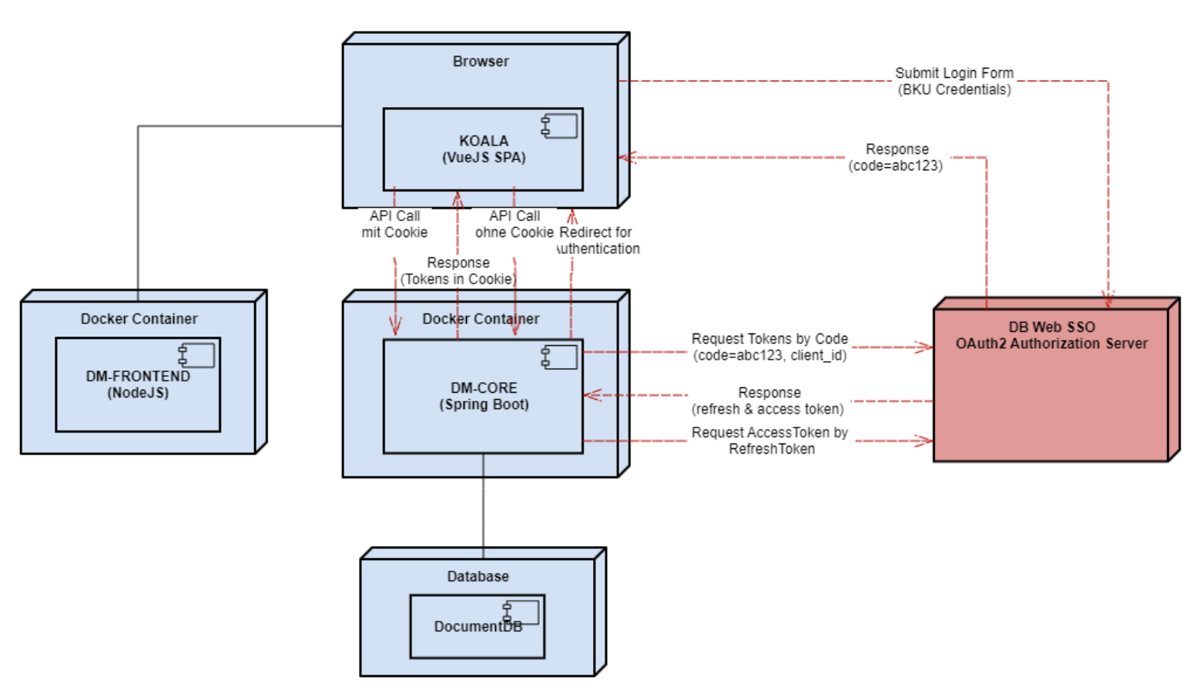

Solution Concept: SSO for SPA using OAuth2

Problem Description

Requirements and Context

- Users and roles management: define roles and rights, including the ability to maintain users according to the company’s policies.

- Authentication: provide seamless login (Single-Sign-On) functionality to the SPA for Data Developers, Data Managers and other SMEs.

- Authorization: only authorized users should be allowed to access the application’s functionality according to the specified role and granted privileges (Principle of Least Privilege)

- Architecture decision for

  - Identity and Access Management (Keycloak vs company’s Active Directory)

  - Authentication and Authorization protocols (SAML, OpenID Connect, OAuth2)

Solution

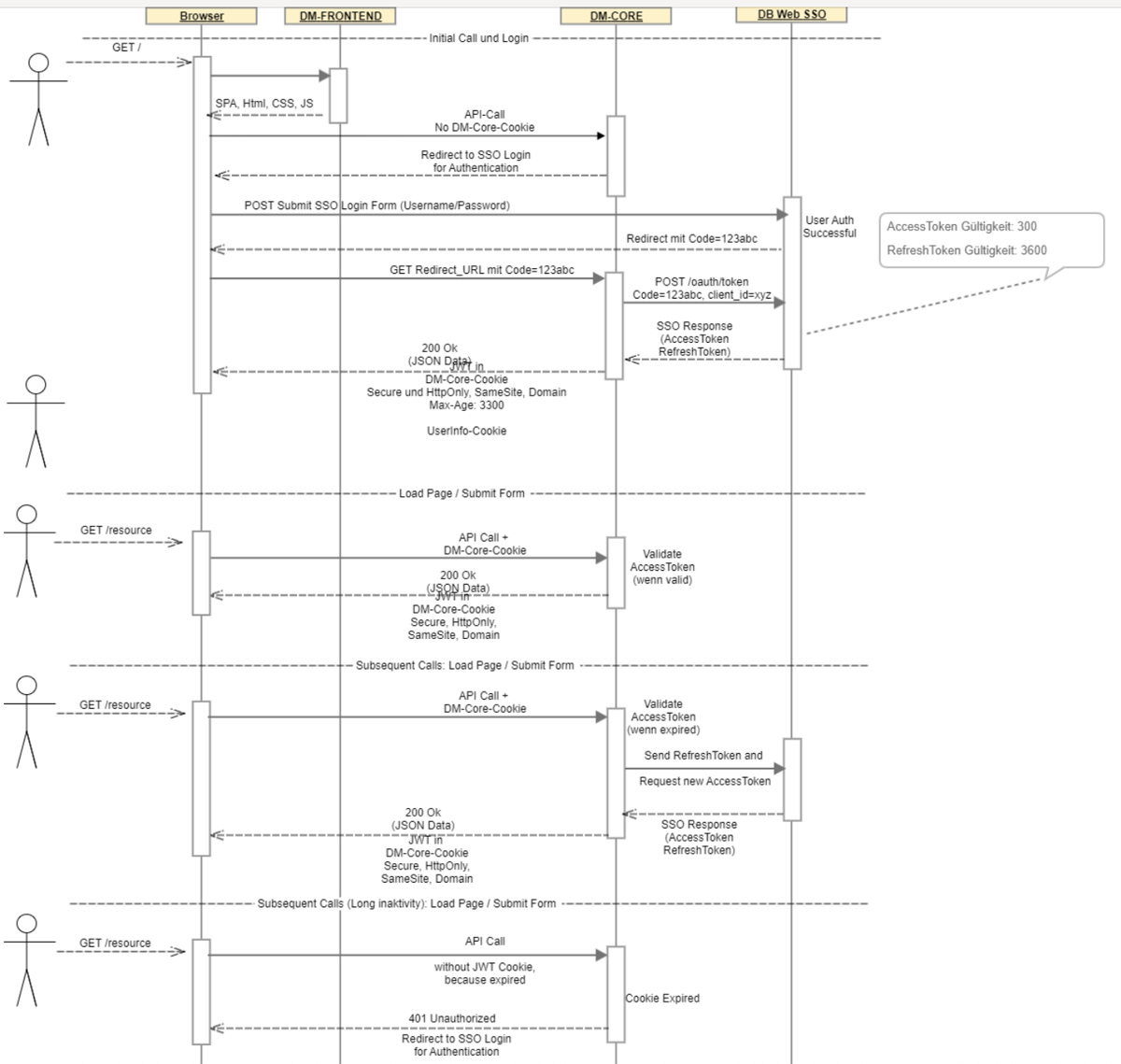

System Architecture (Component Diagram and Deployment View)

Single-Sign-On Integration using OAuth2-Code-Flow (Sequence Diagram)
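The first step of the OAuth2 authorization code flow is to redirect the browser to the identity provider's authorization endpoint. The Python sketch below builds such a request. It assumes the PKCE extension (RFC 7636, S256 method), which is commonly recommended for SPAs but is an assumption here, not something the solution above states. The function names and all URLs and client identifiers used with them are hypothetical.

```python
import base64
import hashlib
import secrets
from urllib.parse import urlencode


def make_pkce_pair():
    """Return (code_verifier, code_challenge) for the S256 method of RFC 7636."""
    verifier = secrets.token_urlsafe(64)  # URL-safe string within the allowed 43..128 chars
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    # Base64url without padding, as the RFC requires.
    challenge = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return verifier, challenge


def build_authorization_url(authorize_endpoint, client_id, redirect_uri, scope, state, code_challenge):
    """Build the URL the SPA redirects the browser to in order to start the code flow."""
    params = {
        "response_type": "code",  # ask for an authorization code, not a token
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,  # random value the SPA checks again on the redirect back
        "code_challenge": code_challenge,
        "code_challenge_method": "S256",
    }
    return f"{authorize_endpoint}?{urlencode(params)}"
```

The SPA keeps the `code_verifier` and sends it later when exchanging the returned code for tokens, so an intercepted code alone is not enough to obtain them.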